This blog is for the students and instructors to continue the conversations on the role of information technology in modern corporations during the second decade of the 21st century. Please feel free to join the conversation by commenting on our discussions.

Wednesday, March 31, 2010

Publishers Optimistic about iPad

http://www.cnbc.com/id/36123292

Cloud Computing for Competitive Advantage

I would like to look at the same question from the demand side. Some CIOs feel that, given its current technical architecture, Cloud Computing will find it difficult to provide differentiated solutions to companies; it is therefore most probably a parity game rather than a differentiation strategy. I tend to agree with this line of thinking. Here's why.

In a Cloud setup, we could talk about Infrastructure-as-a-Service, Platform-as-a-Service or Software-as-a-Service Clouds. Infrastructure is mostly a parity game, since raw compute and storage capacity from one provider is largely interchangeable with another's. At the Platform and Software layers, we could argue that differentiated solutions are possible. But Software-as-a-Service is mostly delivered through a multitenant architecture, in which all customers share the same application and can vary it only through the configuration options the provider exposes, so there is very little scope for differentiation. You would therefore still need customized, specialized applications (different from, and on top of, the core SaaS applications) to provide differentiated value. SaaS by itself, then, probably would not provide competitive advantage.
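To make the multitenancy point concrete, here is a minimal, hypothetical Python sketch: one shared code path serves every tenant, and tenants can differ only in the settings the provider chose to expose. `TenantConfig`, `invoice_total` and the two tenants are illustrative names, not any real SaaS product's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TenantConfig:
    """Hypothetical per-tenant settings: the only things a tenant can change."""
    name: str
    currency: str = "USD"
    tax_rate: float = 0.0


def invoice_total(config: TenantConfig, line_items: list[float]) -> str:
    """Shared business logic: every tenant runs exactly this code."""
    subtotal = sum(line_items)
    total = subtotal * (1 + config.tax_rate)
    return f"{config.name}: {total:.2f} {config.currency}"


# One application instance serves all tenants; they differ only in configuration.
TENANTS = {
    "acme": TenantConfig("Acme", tax_rate=0.08),
    "globex": TenantConfig("Globex", currency="EUR", tax_rate=0.20),
}

if __name__ == "__main__":
    for cfg in TENANTS.values():
        print(invoice_total(cfg, [100.0, 50.0]))
```

A tenant that wanted, say, a different discount rule could not get it from configuration alone; it would need a separate application built on top of the shared service, which is where the differentiation would have to come from.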

One way in which cloud computing would help, at least in the near future, is by letting companies outsource all the mundane, routine or "parity" activities, so that CIOs/CXOs can concentrate their resources on more differentiated activities. But Cloud Computing in itself, I believe, would provide little differentiation, at least in the near term, to companies on the demand side.

Please feel free to critique this thought process ...

Security: Hacking passwords is easy

Statistically speaking, that should probably cover about 20% of you. But don’t worry. If I haven’t gotten it yet, it will probably only take a few more minutes before I do…

- Your partner, child, or pet’s name, possibly followed by a 0 or 1 (because they’re always making you use a number, aren’t they?)

- The last 4 digits of your social security number.

- 123 or 1234 or 123456.

- “password”

- Your city, or college, football team name.

- Date of birth – yours, your partner’s or your child’s.

- “god”

- “letmein”

- “money”

- “love”
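The list above is effectively an attacker's first-guess dictionary. A minimal Python sketch of checking a password against such a list follows; the profile fields (`names`, `ssn_last4`, `places`, `dates`) and the sample values are hypothetical, and the check only catches exact matches, so passing it does not make a password strong.

```python
# Hypothetical checker: does a password fall to the "first guesses" listed above?
COMMON = {"123", "1234", "123456", "password", "god", "letmein", "money", "love"}


def candidate_guesses(names=(), ssn_last4="", places=(), dates=()):
    """Build the guess list an attacker could derive from public facts about a target."""
    guesses = set(COMMON)
    for name in names:
        for variant in {name, name.lower(), name.capitalize()}:
            # The name alone, or followed by the 0 or 1 that sites often force on us
            guesses.update({variant, variant + "0", variant + "1"})
    if ssn_last4:
        guesses.add(ssn_last4)
    for place in places:           # city, college, football team
        guesses.update({place, place.lower()})
    guesses.update(dates)          # dates of birth, as the user might type them
    return guesses


def falls_to_first_guesses(password, **profile):
    """True if the password is in the attacker's first-guess list."""
    return password in candidate_guesses(**profile)


if __name__ == "__main__":
    profile = {"names": ["Rex", "Emma"], "ssn_last4": "4821",
               "places": ["Boston"], "dates": ["051780"]}
    for pw in ["rex1", "letmein", "4821", "Tr0ub4dor&3x!"]:
        print(pw, falls_to_first_guesses(pw, **profile))
```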

Next, whatever doesn't fall to the tricks above can be broken with a brute-force attack, pairing random usernames with known words and number combinations.

So, all we have to do now is unleash Brutus, wwwhack, or THC Hydra on their server with instructions to try, say, 10,000 (or 100,000 – whatever makes you happy) different usernames and passwords as fast as possible. The success of a brute-force attack depends on the length and complexity of the password, as shown in the table:

| Password length | All characters | Lowercase only |
|---|---|---|
| 3 characters | 0.86 seconds | 0.02 seconds |
| 4 characters | 1.36 minutes | 0.46 seconds |
| 5 characters | 2.15 hours | 11.9 seconds |
| 6 characters | 8.51 days | 5.15 minutes |
| 7 characters | 2.21 years | 2.23 hours |
| 8 characters | 2.10 centuries | 2.42 days |
| 9 characters | 20 millennia | 2.07 months |
| 10 characters | 1,899 millennia | 4.48 years |
| 11 characters | 180,365 millennia | 1.16 centuries |
| 12 characters | 17,184,705 millennia | 3.03 millennia |
| 13 characters | 1,627,797,068 millennia | 78.7 millennia |
| 14 characters | 154,640,721,434 millennia | 2,046 millennia |
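The table's figures are consistent with an attacker trying about one million guesses per second against every possible password of exactly the given length, drawn either from the 95 printable ASCII characters or from the 26 lowercase letters. For example, 95^3 = 857,375 guesses take about 0.86 seconds at that rate. The rate and alphabet sizes are our inference from the numbers, not something the table states. A minimal Python sketch of the calculation:

```python
# Worst-case time to brute-force a password by trying every possible string.
GUESSES_PER_SECOND = 1_000_000  # assumed attack rate (inferred from the table)
ALL_PRINTABLE = 95              # printable ASCII characters, including space
LOWERCASE = 26                  # a-z only
SECONDS_PER_YEAR = 365 * 24 * 60 * 60


def keyspace(alphabet_size: int, length: int) -> int:
    """Number of distinct passwords of exactly `length` characters."""
    return alphabet_size ** length


def worst_case_seconds(alphabet_size: int, length: int,
                       rate: float = GUESSES_PER_SECOND) -> float:
    """Seconds needed to try every candidate at `rate` guesses per second."""
    return keyspace(alphabet_size, length) / rate


if __name__ == "__main__":
    for n in range(3, 15):
        all_years = worst_case_seconds(ALL_PRINTABLE, n) / SECONDS_PER_YEAR
        low_years = worst_case_seconds(LOWERCASE, n) / SECONDS_PER_YEAR
        print(f"{n:>2} chars: all characters {all_years:.3g} years, "
              f"lowercase only {low_years:.3g} years")
```

Each extra character multiplies the work by the alphabet size (95x or 26x), which is why length matters so much. These are worst-case figures: a search over a random password succeeds about halfway through on average, and attackers try likely passwords first.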

The implications of this are immense. As more information moves to the cloud, we will need passwords to access our data. If our passwords are easily hacked, cloud services companies like Amazon or Google will need to come up with better ways of verifying that you are actually who you say you are.

On-Demand versus Cloud-Computing: Poll

Go ahead and participate, and let us see how your opinions stack up.

Tuesday, March 30, 2010

Gmail is now a Platform!

Media and Entertainment - Digital Content Providers - Group Post

We analyzed the key players in digital content distribution: Amazon, Comcast, Hulu, Netflix, TiVo and Youtube.

3 - Mobile video

According to a recent Morgan Stanley report, mobile video distribution is set to grow 66-fold from its current level, and mobile video traffic will account for 64% of total mobile bandwidth. Video content owners and distributors will be fighting to monetize this new market. The key players do not want to miss this huge opportunity and are all set to offer their services on mobile phones (e.g. YouTube on mobile, Comcast mobile, TiVo mobile). Considering the small size of the mobile screen, the offerings are limited. However, "mobile" may mean different things to different people (witness Steve Jobs's statement that Apple's mobile device sales exceed Nokia's)!

CDMA iPhone to be Released in June

Apple has just announced that it will be creating a new iPhone that works on a CDMA network, the same type of network that both Verizon and Sprint use. This really opens the door for Verizon and will allow it to compete more effectively with AT&T, which has a 43% share of U.S. smartphone customers, primarily because it is currently the exclusive provider of the iPhone. iPhone usage has overwhelmed AT&T's network, causing dropped calls and lost data and leading to a huge number of customer complaints. However, with Apple's three-year exclusive arrangement with AT&T coming to an end, consumers now hope to experience the iPhone on the Verizon network, which arguably has the best network currently in the United States. This is a huge deal for Verizon because, despite being the best network on a number of levels, it hasn't been able to offer consumers what many of them want, the iPhone, because of AT&T's exclusive. It's going to be very interesting to see how this all pans out. I'm especially interested to see how Verizon's network handles the deluge of data that comes with iPhone use; only time will tell.

Monday, March 29, 2010

Saturday, March 27, 2010

iPhone: Line2 allows users to make WIFI calls

The NY Times reports on a new iPhone app called Line2. The app allows iPhone users to receive phone calls over a Wi-Fi connection, set up a new number (à la Google Voice) and even set up call routing: small businesses can set up prompts (Press 1 for Accounting, 2 for Sales, etc.).

Most interesting is that this app is still available. Apple said it blocked Google Voice because Google's app replaced "Apple's user interface with its own user interface for telephone calls." However, Line2 uses the iPhone's native interface and phone technology, so it's not replacing anything, other than AT&T.

How much longer can this app survive? Especially considering that AT&T is the one being hurt here, and they are also subsidizing the hardware.

Thursday, March 25, 2010

Microsoft-Apple-Google: Fighting and Winning Together!

Wednesday, March 24, 2010

Telecom Industry - Group Post

1) Cisco makes the infrastructure hardware.

2) Ericsson and Alcatel-Lucent buy Cisco's hardware (and create some of their own) and create and manage the network infrastructure.

3) AT&T and Bharti Airtel contract to either buy or lease the network and then allow consumers to use it to make calls and data connections.

4) Sony-Ericsson makes the phones that actually make the calls and data connections. This layer also provides the application platforms for developers to use to create apps that consumers use to make data connections.

When studied independently, each layer has its own unique challenges. However, when the layers are taken in aggregate, one major problem emerges: the current revenue model is not sustainable. The largest area of growth is in the phone and app layer, where consumers have shown a willingness to spend more money for a more feature-rich experience. However, the costs of supporting this experience are incurred lower in the stack. The network needs to be upgraded to support the proliferation of data connections, but the companies that need to make this investment are not the companies that stand to benefit most from it.

Tuesday, March 23, 2010

Facebook Founder Turns Focus on Philanthropy

As reported in this article in The Chronicle of Philanthropy, Chris Hughes, one of the co-founders of Facebook, has announced that he will be launching a new website this coming fall with the purpose of helping people connect with nonprofits and causes that resonate with them. “Jumo—which means ‘together in concert’ in Yoruba, a West African language—will be designed to take advantage of content that has already been created elsewhere and offer robust tools for sharing content, says Mr. Hughes. ‘The last thing I want to do is add yet another site to a nonprofit’s plate,’ he says. ‘I don’t want them to have to go to yet another destination to share who they are and the work that they’re doing.’” Jumo has been registered as a nonprofit and is in the process of applying for tax-exempt status from the IRS, while also working to raise over $2 million. The Jumo concept has secured $500,000 through individual donations and is seeking grants from the likes of Ford and Rockefeller Foundations.

Our Fundraising Sector Team has discussed the evolving but still fragmented world of online giving. The Jumo website plans not only to aggregate causes, but also to track, analyze, and aggregate donor perspectives (gathered through a variety of questions posed on the site), which can then be used to steer donors to the causes that will resonate most with them. These proposed methods would take the current donor-aggregator and cause-platform models one step further. However, a few of the many questions that arise are:

- Will a separate website draw a critical mass of more interested and motivated viewers, enough to offset the benefit of sharing nonprofit and cause postings on Facebook, where there are many more, albeit often less motivated, viewers?

- What will be the vetting process before allowing nonprofits, causes, and volunteer and advocacy opportunities to be posted on the website?

Monday, March 22, 2010

Google and China

This article further examines what that means for Google and China. Google was seen as an innovator that was very influential in the development of the Chinese Web, but its four-year experiment to bring freedom of information to China's 350 million Internet users ended Monday, when it shut down most of its mainland operations and began redirecting users to its Hong Kong-based site.

The remaining mainland operations came under pressure from Chinese officials and Google partners. China Mobile had planned to use Google on its mobile Internet page, but that deal has been scrapped.

The government does not acknowledge to its citizens that it controls information on the Internet, and claims that it offers the same information that the rest of the world can access. Google challenged this idea and decided it would no longer play along with the charade.

Any company that deals in information must now realize that the Chinese government will place politics above economics, and that any attempt to resist censorship may threaten the company's very existence in China, as well as its strategic partnerships with Chinese companies.

Music Subscription Services: Not for Me Yet...

Sunday, March 21, 2010

Energy Sector: Group Blog Post

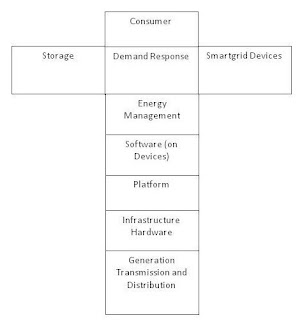

Our project focuses on the smart grid stack and the effect that emerging smart grid technologies and business models will have on the larger energy industry. Figure 1 shows our preliminary stack analysis of the smart grid sector.

Figure 1: Smart Grid Stack

A smart grid delivers electricity from utilities to commercial and residential consumers using two-way digital technology. Using this digital communication, utilities can change their pricing to better reflect the true cost of energy at the time it is consumed, and consumers can change their energy usage to take advantage of this new pricing scheme. Device manufacturers build machines that react automatically to information generated by the smart grid. Consulting firms (in the Energy Management layer) help large energy consumers get the maximum benefit from their energy decisions. Platform providers supply software that allows consumers, consultants, and devices to collect and organize the information generated by the smart grid. The Generation, Transmission and Distribution layer is largely vertically integrated within the utilities and represents the smart grid’s interaction with the larger energy industry stack.
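As an illustration of a device reacting to price information, here is a minimal sketch of a hypothetical appliance controller that, given a list of hourly prices, picks the cheapest window for a flexible load such as a dishwasher. The hourly price list, the `cheapest_start` and `run_cost` helpers, and the sample prices are all assumptions for illustration; real price signals and device protocols differ.

```python
from typing import Sequence


def cheapest_start(prices: Sequence[float], duration: int) -> int:
    """Start hour that minimizes total price for a run of `duration` consecutive hours.

    `prices` holds one (hypothetical) price per hour, e.g. dollars per kWh.
    """
    if not 0 < duration <= len(prices):
        raise ValueError("duration must be between 1 and len(prices)")
    best_start, best_cost = 0, float("inf")
    for start in range(len(prices) - duration + 1):
        cost = sum(prices[start:start + duration])
        if cost < best_cost:  # keeps the earliest of any equally cheap windows
            best_start, best_cost = start, cost
    return best_start


def run_cost(prices: Sequence[float], start: int, duration: int, kw: float) -> float:
    """Cost of drawing `kw` kilowatts for `duration` hours starting at `start`."""
    return kw * sum(prices[start:start + duration])


if __name__ == "__main__":
    # Hypothetical price signal in $/kWh, one entry per hour starting at 6 p.m.
    prices = [0.30, 0.28, 0.22, 0.15, 0.10, 0.08, 0.08, 0.12, 0.20, 0.25]
    start = cheapest_start(prices, duration=2)
    print(f"start at hour {start}, cost ${run_cost(prices, start, 2, kw=3.0):.2f}")
```

A brute-force scan over every window is enough here because a day has only 24 hourly slots; the point is simply that a price signal lets the device, not the consumer, do the shifting.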

Integration of Smart Grid

Initial smart grid infrastructure is being deployed by existing utility companies within their service areas. This early smart grid suffers from a lack of standards that would allow information sharing. While the free flow of information would yield the greatest benefit for energy efficiency and end consumers, an open and integrated smart grid may not be in the utilities' best interests, because it could shift profitability to the platform and energy management layers.

The long-term trend will be toward an integrated smart grid, but the speed of that integration suggests two scenarios. Under the Slow Integration Scenario, the utilities keep their individual smart grid networks closed and unconnected. Under the Fast Integration Scenario, standards are set for hardware and software so that information can be freely shared within and between smart grid networks.

Information Security

The extension of two-way digital communications could make protecting the smart grid from a cyber attack very challenging. The smart grid would depend on computer-based control systems. These systems will be increasingly connected to open networks such as the internet, exposing them to cyber attacks. Any failure of our electric grid, whether intentional or unintentional, would have a significant and potentially devastating impact.

We cannot rule out terrorist attacks in the form of hacking into the grid's control systems. Even a small manipulation of the system frequency can cause blackouts and damage equipment. Security risks therefore need to be addressed properly to prevent cyber attacks that could cost billions of dollars.

To mitigate the risks, a minimum of four layers of physical security must complement each other in the smart grid: 1) environmental design, 2) mechanical and electronic access control, 3) intrusion detection, and 4) video monitoring. Some experts have suggested that satellite monitoring of transmission lines will be required.

Cross Industry Disruption

A quick look at the energy industry stack shows us that IT companies that have the scale of operations and the financial power to succeed have penetrated the energy industry. This has resulted in the blurring of industry boundaries. With the help of software solutions such as Google PowerMeter and Microsoft Hohm, these IT companies are creating and capturing value in the lucrative services layer of the energy industry stack. The entry of these companies carries the threat of commoditization for GE Energy’s core energy infrastructure products.

GE Energy appreciates the effort required to build competencies in Information Technology and to open a front against established players such as Google and Microsoft. GE Energy is exploiting the disruptions caused by IT companies in the energy sector through strategic partnerships to drive growth in its core energy infrastructure and appliance businesses. GE Energy is using its current core energy infrastructure products and its consulting services as rich sources of cash. It is then using this cash to fund start-up companies that are leaders in the smart grid space. It is also using these ample resources to promote its smart meters, to influence government policy and to promote the smart grid in a big way.

Strategic Partnerships

As value in the energy industry shifts from traditional utility companies to more service-oriented companies (storage, smart grid applications, etc.), there will be two interesting battles in the near future:

1. Standards: for storage technology, for smart grid device configurations (for the two-way data flow), and finally, over which platform will be the winner.

2. Partnerships: Utility and device-making companies have already begun fighting over strategic partnerships. They understand that the key to winning in the new market dynamic is to influence the winners in as many layers as possible. One notable partnership is between GE (device layer) and A123 Systems (storage layer).

As most of our team identified “strategic partnerships” as a recommendation for our respective companies, we will examine this issue in detail, focusing on the winners and losers of such partnerships and on how their subsequent reactions will affect the overall industry dynamic in the coming decade.

The Loyalty of E-News Readers Heats Up the Battle Between Facebook and Google

Last week Facebook surpassed Google as the most visited website in the United States. This is of particular interest to online news providers (as well as to my team for this project, which is focusing on publishing), as they need to know where to focus their advertising efforts. However, loyalty is as important as volume, and it appears that Facebook is winning on this front too. Another recent study showed that not only does Facebook have more users, but its users also tend to be more loyal than Google's. The article noted that “[a]mong the top five print media web sites for the week ending March 6, 2010, 78 percent of Facebook users returned to that site to consume more news, versus only 67 percent of Google News users making return visits.”

A key theme for our project is the organizational relationship between the physical and digital worlds. All publishers, whether they are publishing books, news, magazines or textbooks, are struggling to develop new revenue models as content becomes digital. Online news providers are hit especially hard because users are accustomed to getting this service for free. News sites are concerned that if they start charging, people will just shift to another site. It appears, however, that most people who use the internet for their news are not particularly promiscuous with their sources. A recent article in the New York Times reported that “only 35 percent of the people who go online for news have a favorite site, and just 21 percent are more or less ‘monogamous,’ relying primarily on a single Internet news source…but 57 percent of that audience relies on just two to five sites.” However, “just 7 percent of people said they would be willing to pay for access to any news site. And even among the people who are most loyal to a single site, only 19 percent said they would pay, rather than seek free news somewhere else.”

Online news providers are in a classic prisoner’s dilemma when it comes to charging for content. However, there could be interesting new advertising opportunities for these providers if they can take advantage of the fact that 57% of online news readers rely on just two to five sites, coupled with the loyalty of Facebook users. Regardless, it’s clear that the battle between Facebook and Google is heating up even more as each tries to prove that clicks originating from its site are ultimately more valuable.

Saturday, March 20, 2010

A cyberwarrior and the smart grid

Jianwei said his intention was to show vulnerabilities in the network as a way to learn how to make it more stable, but some think his paper shows China's interest in disrupting the U.S. power grid.

As the U.S. creates a smart grid, it would seem that more and more vulnerabilities will arise. Information security will be an essential part of any smart grid infrastructure.

Thursday, March 18, 2010

Non-Profit Fundraising Sector

• Donor Management Software and Services: Salesforce, BlackBaud

• Donor Aggregators: Yourcause.com, Kiva, Charity Water

• Non-Profit Direct Service: Root Capital

IT has already been transforming this sector, and as we analyze its impact today and moving forward, here are a few of the key themes we have identified:

• Social Networking: Social networking has already played a big role in changing the tools of fundraising. On both Facebook and more specialized social networks such as Yourcause.com, the range of donors has widened, bringing in more donations, though sometimes smaller ones. However, it remains to be seen how best to tap into these social networks and whether there is a place for the specialized ones.

• Donor Management Software: BlackBaud and Salesforce are two of the primary providers of donor management software, which is essentially CRM for non-profits. Their applications, and their interfaces with donor channels such as social networking, are becoming more and more sophisticated. Key questions are how the data will be used and how prevalent these systems will become in the non-profit fundraising sector.

• Reporting and Impact Measurement: With the IT systems provided by companies such as BlackBaud and Salesforce, the amount of data available to non-profits is increasing, but how that data is used is still developing. More and more non-profits are being asked to provide measurable outcomes and impacts in order to obtain the grants and donations they depend on. This new demand by foundations and donors is changing the way non-profits are held accountable. Root Capital, for example, received a large grant that came with significant reporting requirements for measurable impact.

• International Philanthropy: Online platforms have enabled new markets and new recipients of philanthropic giving to emerge. Kiva is a prime example, linking donors in affluent areas with small businesses in struggling economies. Without an online platform providing the information and the connection between these two groups, this giving would be much harder to arrange. The question remains as to what other populations may be connected for philanthropic purposes through online platforms.

Tuesday, March 16, 2010

Microsoft - Project Natal

What is Boku?

Tackling Bandwidth in the Next Decade: Public and Private Approaches

In the last two days there have been numerous stories about the US government’s efforts, by way of the FCC, to provide 50-100 Mbps to 100 million citizens by the year 2020. In addition to this goal, the plan lays out five additional goals:

1. The US should lead the world in mobile innovation;

2. Every American should have access to robust broadband services;

3. Every community should have at least one high-speed broadband access point;

4. Every first responder should have access to a nationwide wireless network; and,

5. To support clean energy initiatives, US households should have access to a high-speed network to manage their energy use more efficiently.

This will be made available through a “revenue-neutral” model that will be funded through an auction of 500 MHz of spectrum, recently made available due to the switch of TV broadcasts from analog to digital.

On the other end of the spectrum (pun not intended), Google is soliciting offers from cities and towns to build “an advanced data network capable of downloading Internet data at one billion bits per second”. The purpose, as publicly stated, is to see just what the public can do with really high-speed Internet access. However, in return for its efforts, Google will look to sell access to that network on a subscription basis and, at those speeds, will be able to completely blow away the competition (if users choose solely on the basis of download speed).

The government’s plan represents a 16x-20x increase over currently available speeds, which would be in line with the expectations of proponents of the Bandwidth Law. The Google plan, if (a) feasible and (b) implemented, would obliterate the underlying tenets of that law and would upset the balance among bandwidth, storage, and processing. Google could certainly supplement and complement the adoption of Internet speeds this high through its storage capabilities; however, where would the processing power come from to let users make meaningful use of these speeds? The government’s approach seems much more in line with the expected progress of the other two components of the bandwidth-processing-storage triumvirate, which is what it takes to maximize the value of all three.

By the way, several municipalities have put up Facebook fan pages about this, but I could not find any related Google Buzz feeds:

http://www.facebook.com/search/?q=google+high+speed+internet&init=quick

FCC plans for future of broadband

The government's plan also "seeks a 90 percent broadband adoption rate in the United States by 2020, up from roughly 65 percent."

This will create strong competition among telecom and cable companies as they rush to sign up new users and win government support for expansion, in order to become first movers in areas where residents simply do not have an inexpensive way to connect to the Internet.

For instance, my parents live in rural Indiana, where cable lines don't run. Satellite access is available, but they considered it prohibitively expensive for their desired speed. They finally chose a wireless broadband plan from Verizon.

The CEO of Comcast actually praises the government plan, citing a "need for continued private investment in faster competitive broadband networks, and the importance of maintaining a regulatory environment to promote that investment."

Sounds like he expects some government money to help Comcast expand into new areas, or he might use the plan as evidence that regulators need to force broadcast television networks to auction off part of their spectrum for mobile Internet. This would give Comcast the opportunity to compete with Verizon and others in that space.

Who really owns data?

Most people tend to agree that it is OK for Amazon to own and keep secret the data generated by consumer purchases and browsing history (think of the famous "users that bought this item also bought this item"). Similarly, most people are OK with Google targeting advertisements based on data it collects while they are logged in to Google's world. I wonder whether the legality of this will change in light of Toyota's recent problems.

As the article points out, Toyota has equipped every car produced since 2001 with a proprietary "black box", known as an event data recorder (EDR), that records information about the state of the car from 5 seconds before a crash through 2 seconds after it. Toyota has repeatedly denied crash victims and survivors access to this data. With the recent recall of 8 million Toyota vehicles, and the US government and consumers demanding answers to unexplained acceleration problems, it is very likely that Toyota will be forced to provide access to this data in the future.
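Recording the seconds *before* a crash implies the recorder is always recording and overwriting its oldest data until a trigger freezes it. A minimal ring-buffer sketch of that idea in Python follows; the class name, sample rate and trigger flag are hypothetical, and nothing here describes Toyota's actual (proprietary) design.

```python
from collections import deque


class EventRecorder:
    """Keep a rolling pre-event window and capture a fixed post-event window.

    Hypothetical parameters: `rate_hz` samples per second, `pre_s` seconds kept
    before the trigger, and `post_s` seconds recorded from the trigger onward
    (the trigger sample itself is the first post-event sample).
    """

    def __init__(self, rate_hz: int = 10, pre_s: int = 5, post_s: int = 2):
        self._pre = deque(maxlen=rate_hz * pre_s)  # oldest samples drop off automatically
        self._post_len = rate_hz * post_s
        self._post = None     # becomes a list once the trigger fires
        self.snapshot = None  # the frozen pre + post record

    def record(self, sample, trigger: bool = False) -> None:
        if self.snapshot is not None:
            return                       # event already captured; ignore new data
        if self._post is None and not trigger:
            self._pre.append(sample)     # normal driving: overwrite the oldest sample
            return
        if self._post is None:
            self._post = []              # trigger: stop overwriting the pre-event window
        self._post.append(sample)
        if len(self._post) >= self._post_len:
            self.snapshot = list(self._pre) + self._post


if __name__ == "__main__":
    recorder = EventRecorder(rate_hz=1)          # one sample per second for readability
    for second in range(20):
        recorder.record({"t": second}, trigger=(second == 12))
    print([s["t"] for s in recorder.snapshot])   # seconds 7 through 13
```

The fixed-size buffer is the design choice that matters: memory use stays constant no matter how long the car is driven, yet the moments leading up to a crash are always available.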

If a company like Toyota can be legally forced to provide access to data that was generated through interactions with its product, I wonder if the precedent will carry over to companies like Amazon and Google that derive much of their competitive advantage from similar proprietary data.

Saturday, March 13, 2010

MySpace user data for Sale!

MySpace has put a large quantity of bulk user data up for sale on the startup data marketplace InfoChimps. Analysis of the data could reveal things like music trends by ZIP code, popular URLs being shared, and so on.
